CS396 Advanced Computer Graphics Seminar: VR/AR Systems

Lecture Info

Tue/Thur 3:30PM - 5:00PM CST

June 20th - Aug 27th, 2023

M345, Tech Building, Northwestern University

Teaching Team

Course Instructor:

Jipeng Sun, Northwestern University

Course Assistants:

Ashwin Baluja, Northwestern University

Yiran Zhang, Northwestern University

Jiahui Li, Northwestern University

Course Coordinator:

Prof. Jack Tumblin, Northwestern University

Introduction

Welcome to CS396 VR/AR Systems!

Consider how much time you spend looking at screens each day. There is no doubt that the physical and digital worlds are connecting more and more deeply, both to increase social productivity and to satisfy humans’ natural needs for curiosity, respect, autonomy, and purpose. Virtual Reality (VR) and Augmented Reality (AR), by merging the digital and physical worlds in a more convenient and profound way, are the next digital medium for unlocking greater human productivity in the physical world.

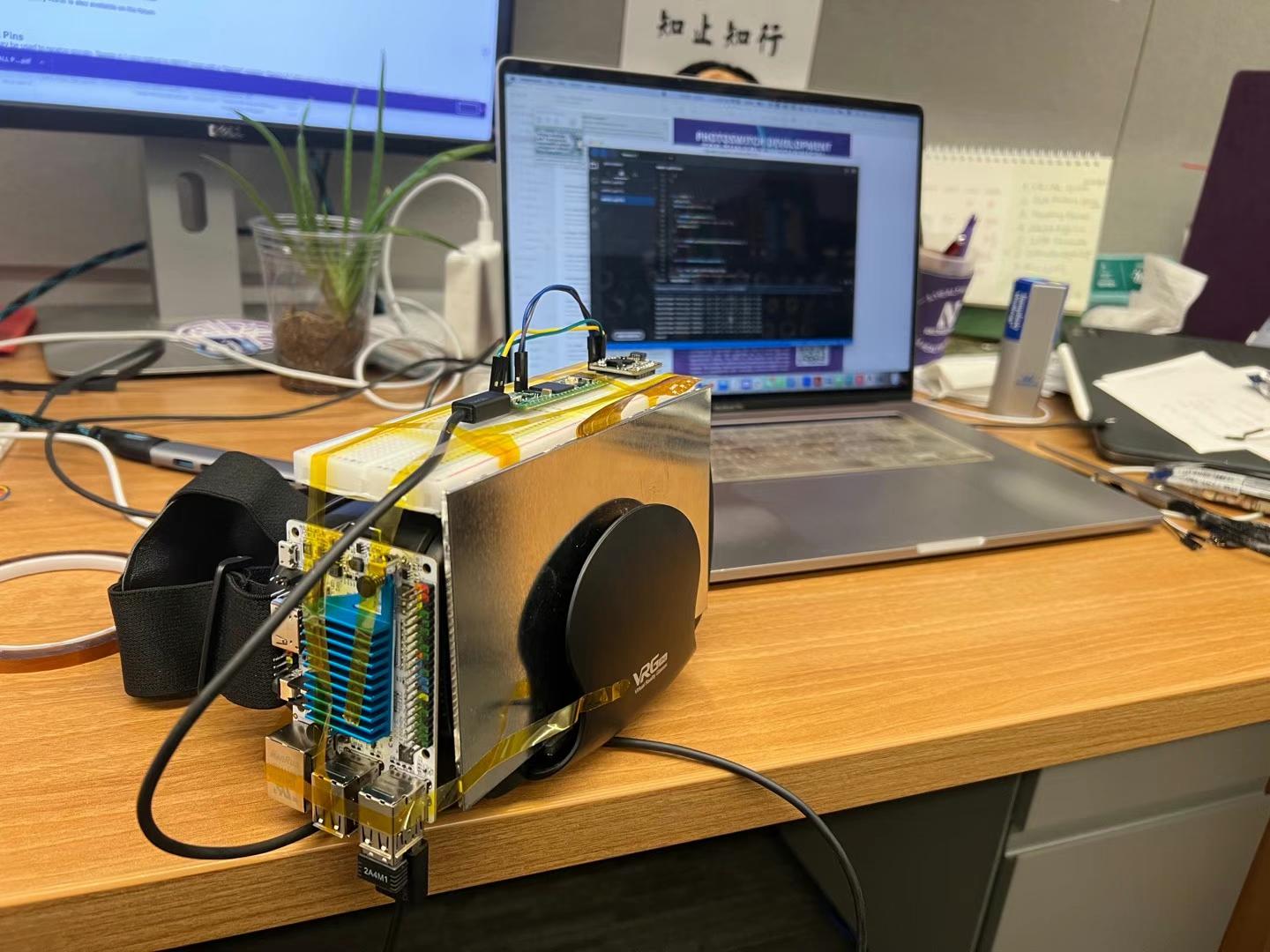

In this course, students will learn how to build a standalone VR hardware and software prototype from scratch in 10 weeks and explore extending it into an LLM-assisted VR or edge-AI AR system. By learning through building, we can better understand the principles, craftsmanship, and subtleties behind creating a real-world VR/AR product.

Course Summary Notes from Ashwin Baluja

Syllabus

Week 1: Introduction to VR/AR Systems

Key questions to ask

- What really makes VR VR?

- What is the computational process of transforming a 3D scene into what is displayed to your eyes?

Introduction to VR/AR systems and basic graphics pipelines.
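As a rough illustration of the second question, the sketch below projects a single camera-space point to pixel coordinates. It assumes an OpenGL-style camera looking down the negative Z axis; the function name `project_point`, the 90-degree field of view, and the 640x480 viewport are illustrative choices, not values from the course.

```python
import math


def project_point(point, fov_y_deg=90.0, width=640, height=480, near=0.1):
    """Project a camera-space 3D point to pixel coordinates.

    Assumes the camera looks down -Z (OpenGL-style convention).
    Returns None when the point lies in front of the near plane or behind the camera.
    """
    x, y, z = point
    if z > -near:
        return None

    # Focal scale derived from the vertical field of view.
    f = 1.0 / math.tan(math.radians(fov_y_deg) / 2.0)
    aspect = width / height

    # Perspective divide: camera space -> normalized device coordinates in [-1, 1].
    ndc_x = (f / aspect) * x / -z
    ndc_y = f * y / -z

    # Viewport transform: NDC -> pixels, flipping Y so that row 0 is the top of the image.
    px = (ndc_x + 1.0) * 0.5 * width
    py = (1.0 - ndc_y) * 0.5 * height
    return px, py
```

A point on the optical axis, such as `(0, 0, -1)`, lands at the center of the viewport, and points farther from the camera move toward that center, which is the familiar perspective foreshortening.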

Week 2: Design VR Systems from Scratch

Key questions to ask

- What is the minimum viable product (MVP) for a VR system?

- How could we turn a passion or dream into a workable engineering plan?

- What are the tradeoffs among different design plans?

Brainstorming VR design plans. There are no ‘correct’ answers in engineering. The problem we face is: ‘Driven by your passion and goal, given limited resources, and knowing the metrics by which you will evaluate success, how do you make the best possible plan and act on it?’ Come up with a step-by-step iteration plan for hardware prototyping. Start sourcing hardware components based on your design.

Brainstorm Recording Notes from Ashwin Baluja

Week 3: Graphics Rendering Pipeline on Embedded Systems

Key questions to ask

- Given our designed MVP, a near-eye monitor, how do we render scenes on that screen?

- What is the logic of the graphics rendering pipeline?

- How do we use WebGL to implement the rendering pipeline?

Week 4: Human Binocular Vision System

Key questions to ask

- Will the currently implemented binocular rendering work? Why won’t it give us a 3D effect?

- What are the anatomy and functions of the human binocular visual system?

- How should we change our graphics rendering logic to fit human perception?

- What are the depth cues for humans? How does their relative importance change with distance?
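One way to make these questions concrete is to offset the two eye cameras by the interpupillary distance (IPD) and look at how the vergence angle changes with distance. The sketch below is only an illustration: the 63 mm IPD default and the function names are assumptions, and the geometry treats the eyes as two points fixating a target straight ahead.

```python
import math


def eye_positions(head_pos, ipd_m=0.063):
    """Return (left_eye, right_eye) positions offset along the head's X axis.

    Assumes the head faces along Z with X pointing to the user's right;
    the 0.063 m default IPD is an illustrative value.
    """
    x, y, z = head_pos
    half = ipd_m / 2.0
    return (x - half, y, z), (x + half, y, z)


def vergence_angle_deg(distance_m, ipd_m=0.063):
    """Angle between the two eyes' lines of sight when fixating a point straight ahead."""
    return math.degrees(2.0 * math.atan(ipd_m / (2.0 * distance_m)))
```

Rendering the scene once from each of the two eye positions gives each eye a slightly different image. Because the vergence angle shrinks quickly as distance grows, this binocular cue is strongest for nearby objects and contributes little for distant ones.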

Week 5: Near-Eye Display Optics

Key questions to ask

- To make it real, how do we make an image displayed 2 cm away be perceived as 1.5 meters away from the user?

- What are the popular optical components of current near-eye displays? How do they work? What are the trade-offs?

- Will the rendered images look distorted after adding a lens between the display and the eyes? If so, how should we correct them?

- Why do we feel dizzy when wearing VR/AR glasses? What is the vergence-accommodation problem?
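For the first question, a common starting point is the thin-lens equation, 1/f = 1/d_o + 1/d_i, where a virtual image has a negative image distance. The sketch below solves it for the focal length. It assumes an ideal thin lens, reads the 2 cm as the display-to-lens distance, and ignores the gap between lens and eye; the function names are illustrative.

```python
def focal_length_for_virtual_image(display_dist_m, virtual_image_dist_m):
    """Focal length that places a virtual image at the requested distance.

    Thin-lens equation: 1/f = 1/d_o + 1/d_i, with d_i = -virtual_image_dist_m
    because the virtual image forms on the same side of the lens as the display.
    """
    return 1.0 / (1.0 / display_dist_m - 1.0 / virtual_image_dist_m)


def lateral_magnification(display_dist_m, virtual_image_dist_m):
    """Size of the virtual image relative to the physical display (thin-lens model)."""
    return virtual_image_dist_m / display_dist_m


f = focal_length_for_virtual_image(0.02, 1.5)
m = lateral_magnification(0.02, 1.5)
```

Under these assumptions the required focal length comes out at about 20.3 mm, only slightly longer than the display distance, and the virtual image is about 75 times larger than the display. The display sits just inside the focal length, which is why small changes in spacing shift the perceived distance a lot.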

Week 6: Orientation/Position Tracking Hardware

Key questions to ask

- How do we match the perspective of the rendered scene with the user’s actual head pose?

- How is an ‘inertial measurement unit’ (IMU) designed to measure pose? What are the physical principles behind it?

- How do we turn IMU readings into rotation control?
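As a minimal sketch of the last question, the code below integrates gyroscope angular rates into an orientation quaternion. It assumes rates in radians per second expressed in the sensor's body frame and a (w, x, y, z) quaternion layout; the function names are illustrative, and this is not necessarily the method used in the course.

```python
import math


def quat_multiply(a, b):
    """Hamilton product of two quaternions given as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def integrate_gyro(q, gyro_rad_s, dt):
    """Advance orientation q by one gyroscope sample taken over dt seconds."""
    gx, gy, gz = gyro_rad_s
    rate = math.sqrt(gx * gx + gy * gy + gz * gz)
    angle = rate * dt
    if angle < 1e-12:
        return q  # no measurable rotation in this step

    # Small rotation of `angle` radians about the normalized rate axis.
    s = math.sin(angle / 2.0) / rate
    dq = (math.cos(angle / 2.0), gx * s, gy * s, gz * s)

    # Body-frame rates are applied on the right of the current orientation.
    w, x, y, z = quat_multiply(q, dq)

    # Renormalize to limit accumulated floating-point error.
    n = math.sqrt(w * w + x * x + y * y + z * z)
    return (w / n, x / n, y / n, z / n)
```

Calling `integrate_gyro` once per sample, starting from the identity quaternion `(1, 0, 0, 0)`, tracks head rotation. Gyroscope-only integration drifts over time because sensor bias accumulates, so practical trackers usually also fuse accelerometer (and sometimes magnetometer) readings.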

Week 7: Build VR/AR Without Dizziness

Key questions to ask

- What are the sources of VR/AR dizziness?

- What is the vergence-accommodation conflict? Why is it a problem?

- How could we solve or alleviate the dizziness caused by the vergence-accommodation conflict?

- How do we analyze the latency of the current system?

- What graphics and hardware approaches can cut down the latency?
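One simple way to start a latency analysis is to add up a per-stage budget and convert the total into the angular error seen during a head turn. The sketch below does this; the stage names and millisecond values are hypothetical placeholders, not measurements of any real system.

```python
def motion_to_photon_ms(stage_latencies_ms):
    """Total latency from head motion to updated pixels, as a sum of serial stages."""
    return sum(stage_latencies_ms.values())


def angular_error_deg(head_speed_deg_s, latency_ms):
    """How far the displayed view lags behind the head at a constant turning speed."""
    return head_speed_deg_s * latency_ms / 1000.0


# Hypothetical placeholder budget; replace with values measured on your own prototype.
budget_ms = {
    "imu_sampling": 2.0,
    "sensor_fusion": 1.0,
    "rendering": 11.0,
    "display_scanout": 8.0,
}

total_ms = motion_to_photon_ms(budget_ms)
lag_deg = angular_error_deg(100.0, total_ms)
```

Listing the stages separately shows which one dominates the total, which is usually the first place to look when trying to cut latency.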

Week 8: Controller Pose Estimation for Interaction

Key questions to ask

- What are the human-machine interfaces for VR/AR systems? How could we interact with VR/AR systems?

- How does hand gesture recognition work? What is the general computer vision development pipeline?

- How do we add a camera to the current system?

Week 9: Additional VR Features: Audio, Eye Tracking, Cloud AI Interfaces, and Rendering Engines

Key questions to ask

- Vision alone is not reality; what other sensory inputs could we control?

- Why is eye tracking important for VR/AR systems? How is eye tracking done today?

- How do we leverage advanced graphics engine software to accelerate VR/AR creation?

- How do we export and migrate a graphics engine project into our DIY system?

- How do we leverage cloud AI interfaces to empower your system?

Week 10: State-of-the-Art VR/AR Research (Guest Lectures) and Demo Day

Key questions to ask

- What are the current state-of-the-art VR/AR research projects?

- What could I do next to start a VR/AR career in academia or industry? How do I go further?

- Share and demo your project with the people you care about and love!